And the Last Shall be First (At Least Occasionally)

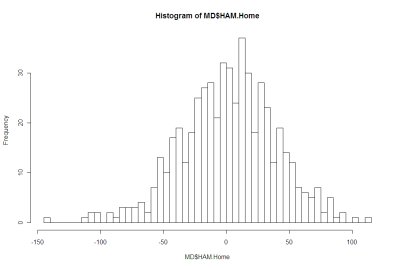

So far we've learned that handicap-adjusted margins appear to be normally distributed with a mean of zero and a standard deviation of 37.7 points. That means that the unadjusted margin - from the favourite's viewpoint - will be normally distributed with a mean equal to minus the handicap and a standard deviation of 37.7 points. So, if we want to simulate the result of a single game we can generate a random Normal deviate (surely a statistical contradiction in terms) with this mean and standard deviation.

Alternatively, we can work from the head-to-head prices, provided we're willing to assume that the overround attached to each team's price is the same. Under that assumption, the favourite's probability of victory is the head-to-head price of the underdog divided by the sum of the favourite's head-to-head price and the underdog's head-to-head price. More generally, any team's probability of victory is its opponent's price divided by the sum of the two prices.

So, for example, if the market was Carlton $3.00 / Geelong $1.36, then Carlton's probability of victory is 1.36 / (3.00 + 1.36) or about 31%. More generally let's call the probability we're considering P%.
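As a minimal sketch of that calculation (the function name `implied_probability` is my own, and the equal-overround assumption is baked in), the arithmetic looks like this:

```python
def implied_probability(own_price: float, opponent_price: float) -> float:
    """Victory probability for a team, assuming both prices carry the same overround."""
    return opponent_price / (own_price + opponent_price)


# Carlton $3.00 / Geelong $1.36, as in the example above
carlton = implied_probability(3.00, 1.36)
geelong = implied_probability(1.36, 3.00)
print(f"Carlton: {carlton:.1%}, Geelong: {geelong:.1%}")
# Carlton: 31.2%, Geelong: 68.8%
```

Because each probability is the other team's price over the same sum, the two always add to exactly 100%.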

Working backwards then we can ask: what value of x for a Normal distribution with mean 0 and standard deviation 37.7 puts P% of the distribution on the left? This value will be the appropriate handicap for this game.

Again an example might help, so let's return to the Carlton v Geelong game from earlier and ask what value of x for a Normal distribution with mean 0 and standard deviation 37.7 puts 31% of the distribution on the left? The answer is -18.5. This is the negative of the handicap that Carlton should receive, so Carlton should receive 18.5 points start. Put another way, the head-to-head prices imply that Geelong is expected to win by about 18.5 points.
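For anyone wanting to reproduce that number, here's a rough sketch using Python's standard library. The helper name `handicap_from_probability` is hypothetical; the only inputs taken from this post are the 37.7-point standard deviation and the two prices.

```python
from statistics import NormalDist

MARGIN_SD = 37.7  # standard deviation of handicap-adjusted margins, from the text


def handicap_from_probability(p_win: float, sd: float = MARGIN_SD) -> float:
    """Value x such that a Normal(0, sd) has p_win of its mass to the left of x."""
    return NormalDist(mu=0.0, sigma=sd).inv_cdf(p_win)


p_carlton = 1.36 / (3.00 + 1.36)  # about 31%
x = handicap_from_probability(p_carlton)
print(round(x, 1))  # should be close to the -18.5 quoted above
```

The sign convention follows the text: a negative x means the team is the underdog and should receive that many points start.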

With this result alone we can draw some fairly startling conclusions.

In a game with prices as per the Carlton v Geelong example above, we know that 69% of the time this match should result in a Geelong victory. But, given our empirically-based assumption about the inherent variability of a football contest, we also know that Carlton, as well as winning 31% of the time, will win by 6 goals or more about 1 time in 14, and will win by 10 goals or more a little less than 1 time in 50. All of this is exactly what we should expect when the underlying stochastic framework is that Geelong's victory margin follows a Normal distribution with a mean of 18.5 points and a standard deviation of 37.7 points.
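Those tail figures can be checked directly from the Normal distribution. The sketch below assumes the 18.5-point expected Geelong margin derived earlier and treats a goal as 6 points, so 6 goals is 36 points and 10 goals is 60 points; the variable names are mine.

```python
from statistics import NormalDist

# Geelong's margin of victory: mean 18.5 points, standard deviation 37.7 points
geelong_margin = NormalDist(mu=18.5, sigma=37.7)

# A Carlton win by k points or more is a Geelong margin of -k or less
p_carlton_wins = geelong_margin.cdf(0)
p_carlton_by_36 = geelong_margin.cdf(-36)
p_carlton_by_60 = geelong_margin.cdf(-60)

print(f"Carlton wins:          {p_carlton_wins:.1%}")
print(f"Carlton by 6+ goals:   about 1 in {1 / p_carlton_by_36:.0f}")
print(f"Carlton by 10+ goals:  about 1 in {1 / p_carlton_by_60:.0f}")
```

The printed ratios should land near the 1-in-14 and a-little-less-than-1-in-50 figures quoted above.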

So, given only the head-to-head prices for each team, we could readily simulate the outcome of the same game as many times as we like and marvel at the frequency with which apparently extreme results occur. All this is largely because 37.7 points is a sizeable standard deviation.
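Here's one way such a single-game simulation might look. It's a sketch only: the function name, the seed and the 100,000 replays are arbitrary choices of mine, and the 18.5-point expected margin is the Carlton v Geelong figure from earlier.

```python
import random


def simulate_margins(expected_margin, sd=37.7, n=100_000, seed=1):
    """Draw n simulated favourite's margins from Normal(expected_margin, sd)."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    return [rng.gauss(expected_margin, sd) for _ in range(n)]


margins = simulate_margins(18.5)  # Geelong's margin over Carlton
upsets = sum(m < 0 for m in margins) / len(margins)
big_upsets = sum(m <= -36 for m in margins) / len(margins)

print(f"Carlton wins in {upsets:.1%} of replays")
print(f"Carlton wins by 6 goals or more in {big_upsets:.1%} of replays")
```

With this many replays the simulated frequencies should sit close to the theoretical 31% and roughly 7%.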

Well if simulating one game is fun, imagine the joy there is to be had in simulating a whole season. And, following this logic, if simulating a season brings such bounteous enjoyment, simulating say 10,000 seasons must surely produce something close to ecstasy.

I'll let you be the judge of that.

Anyway, using the Wednesday noon (or nearest available) head-to-head TAB Sportsbet prices for each of Rounds 1 to 20, I've calculated the relevant team probabilities for each game using the method described above and then, in turn, used these probabilities to simulate the outcome of each game after first converting these probabilities into expected margins of victory.

(I could, of course, have just used the line betting handicaps but these are posted for some games on days other than Wednesday and I thought it'd be neater to use data that was all from the one day of the week. I'd also need to make an adjustment for those games where the start was 6.5 points as these are handled differently by TAB Sportsbet. In practice it probably wouldn't have made much difference.)

Next, armed with a simulation of the outcome of every game for the season, I've formed the competition ladder that these simulated results would have produced. Since my simulations are of the margins of victory and not of the actual game scores, I've needed to use points differential - that is, total points scored in all games less total points conceded - to separate teams with the same number of wins. As I've shown previously, this is almost always a distinction without a difference.
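To make the ladder-building step concrete, here's a small sketch that ranks teams by wins and then by points differential. The function name `build_ladder`, the four placeholder teams and their margins are all made up for illustration; they are not real results.

```python
from collections import defaultdict


def build_ladder(results):
    """Rank teams by wins, then by points differential (sum of margins).

    results: iterable of (team_a, team_b, margin), margin from team_a's viewpoint.
    A margin of exactly 0 credits neither team with a win; simulated Normal
    margins are continuous, so this should essentially never arise.
    """
    wins = defaultdict(int)
    differential = defaultdict(float)
    for team_a, team_b, margin in results:
        wins[team_a] += margin > 0
        wins[team_b] += margin < 0
        differential[team_a] += margin
        differential[team_b] -= margin
    return sorted(wins, key=lambda t: (wins[t], differential[t]), reverse=True)


# Hypothetical results for four placeholder teams
results = [
    ("A", "B", 12.0), ("C", "D", -30.0), ("A", "C", -5.0),
    ("B", "D", 8.0), ("A", "D", 20.0), ("B", "C", 3.0),
]
ladder = build_ladder(results)
print(ladder)  # ['A', 'B', 'D', 'C']
```

In this toy example A and B both have two wins and are separated by differential (+27 versus -1), as are D and C on one win each (+2 versus -28).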

Lastly, I've repeated all this 10,000 times to generate a distribution of the ladder positions that might have eventuated for each team across an imaginary 10,000 seasons, each played under the same set of game probabilities, a summary of which I've depicted below. As you're reviewing these results keep in mind that every ladder has been produced using the same implicit probabilities derived from actual TAB Sportsbet prices for each game and so, in a sense, every ladder is completely consistent with what TAB Sportsbet 'expected'.
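The whole procedure can be sketched end to end as follows. To keep it self-contained I've swapped the real TAB Sportsbet prices for four placeholder teams with made-up strength ratings, and set each game's expected margin to the difference in ratings; that shortcut, the names and the seed are all assumptions for illustration, not the data or code behind the results in this post.

```python
import random
from collections import Counter, defaultdict

SD = 37.7  # standard deviation of game margins, from the text


def simulate_season(fixture, rng):
    """Simulate every game once; return teams ordered from 1st to last."""
    wins, diff = defaultdict(int), defaultdict(float)
    for team_a, team_b, expected in fixture:
        margin = rng.gauss(expected, SD)  # team_a's simulated margin
        wins[team_a] += margin > 0
        wins[team_b] += margin < 0
        diff[team_a] += margin
        diff[team_b] -= margin
    return sorted(wins, key=lambda t: (wins[t], diff[t]), reverse=True)


def simulate_many(fixture, n_seasons=10_000, seed=1):
    """Tally how often each team finishes in each ladder position."""
    rng = random.Random(seed)
    positions = defaultdict(Counter)
    for _ in range(n_seasons):
        for pos, team in enumerate(simulate_season(fixture, rng), start=1):
            positions[team][pos] += 1
    return positions


# Hypothetical ratings (in points); every pair meets twice
ratings = {"A": 20, "B": 5, "C": -5, "D": -20}
fixture = [(a, b, ratings[a] - ratings[b]) for a in ratings for b in ratings if a != b]

n_seasons = 10_000
positions = simulate_many(fixture, n_seasons)
for team, counts in positions.items():
    average = sum(pos * c for pos, c in counts.items()) / n_seasons
    print(f"{team}: average {average:.2f}, 1st {counts[1] / n_seasons:.1%}, "
          f"last {counts[len(ratings)] / n_seasons:.1%}")
```

Note that the game probabilities never change from one simulated season to the next; all of the spread in finishing positions comes from the random draws.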

The variability you're seeing in teams' final ladder positions is not due to my assuming, say, that Melbourne were a strong team in one season's simulation, an average team in another simulation, and a very weak team in another. Instead, it's because even weak teams occasionally get repeatedly lucky and finish much higher up the ladder than they might reasonably expect to. You know, the glorious uncertainty of sport and all that.

Consider the row for Geelong. It tells us that Geelong ranks 1st across the 10,000 simulations, based on its average ladder position of 1.5. The barchart in the 3rd column shows the aggregated results for all 10,000 simulations, the leftmost bar showing how often Geelong finished 1st, the next bar how often they finished 2nd, and so on.

The column headed 1st tells us in what proportion of the simulations the relevant team finished 1st, which, for Geelong, was 68%. In the next three columns we find how often the team finished in the Top 4, the Top 8, or Last. Finally we have the team's current ladder position and then, in the column headed Diff, a comparison of each team's current ladder position with its ranking based on the average ladder position from the 10,000 simulations. This column provides a crude measure of how well or how poorly teams have fared relative to TAB Sportsbet's expectations, as reflected in their head-to-head prices.

Here are a few things that I find interesting about these results:

- St Kilda miss the Top 4 about 1 season in 7.

- Nine teams - Collingwood, the Dogs, Carlton, Adelaide, Brisbane, Essendon, Port Adelaide, Sydney and Hawthorn - all finish at least once in every position on the ladder. The Bulldogs, for example, top the ladder about 1 season in 25, miss the Top 8 about 1 season in 11, and finish 16th a little less often than 1 season in 1,650. Sydney, meanwhile, top the ladder about 1 season in 2,000, finish in the Top 4 about 1 season in 25, and finish last about 1 season in 46.

- The ten most-highly ranked teams from the simulations all finished in 1st place at least once. Five of them did so about 1 season in 50 or more often than this.

- Every team from ladder position 3 to 16 could, instead, have been in the Spoon position at this point in the season. Six of those teams had better than about a 1 in 20 chance of being there.

- Every team - even Melbourne - made the Top 8 in at least 1 simulated season in 200. Indeed, every team except Melbourne made it into the Top 8 about 1 season in 12 or more often.

- Hawthorn have either been significantly overestimated by the TAB Sportsbet bookie or deucedly unlucky, depending on your viewpoint. They are 5 spots lower on the ladder than the simulations suggest they should expect to be.

- In contrast, Adelaide, Essendon and West Coast are each 3 spots higher on the ladder than the simulations suggest they should be.

(In another blog I've used the same simulation methodology to simulate the last two rounds of the season and project where each team is likely to finish.)