Really Simple Proves Remarkably Effective

The Really Simple Margin Predictors (RSMPs), which were purpose-built for season 2013, have shown themselves to be particularly accurate at forecasting game margins. So much so, in fact, that they're currently atop the MAFL Leaderboard, ahead of the more directly Bookmaker-derived Predictors like Bookie_3 that have excelled in previous years.

Both RSMPs are ensemble predictors: they pool the opinions of a set of simpler base learners. RSMP_Simple does this by weighting each base learner equally, and RSMP_Weighted by using a weighting pattern optimised on previous seasons' performances.
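To make the pooling concrete, here's a minimal sketch of the two schemes for a single game. The function name `combine`, the three predicted margins and the weights are all made up for illustration, and the normalisation by the sum of the weights is my assumption rather than a description of how the actual RSMP weights are stored.

```python
def combine(predictions, weights=None):
    """Pool base-learner margin predictions for one game into a single margin.

    With no weights supplied, every base learner counts equally (the
    RSMP_Simple idea). With weights supplied, the result is a weighted
    average (the RSMP_Weighted idea). Dividing by the sum of the weights
    is an assumption made for this sketch.
    """
    if weights is None:
        weights = [1.0] * len(predictions)
    if len(weights) != len(predictions):
        raise ValueError("need exactly one weight per base learner")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(w * p for w, p in zip(weights, predictions)) / total_weight


# Hypothetical predicted home-team margins from three base learners
base_predictions = [12.0, 18.0, 9.0]

simple_margin = combine(base_predictions)                     # equal weights
weighted_margin = combine(base_predictions, [0.5, 0.3, 0.2])  # illustrative weights

print(round(simple_margin, 2), round(weighted_margin, 2))
```

A base learner with a weight of zero simply drops out of the weighted average, which is how an optimised weighting can end up using only a subset of the learners.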

Ensemble predictors have shown themselves to be highly effective in other domains, the trick being to find base learners that are individually strong (or, at least, not weak) yet collectively diverse. With the RSMPs, the strength in the base learners comes from using regressors that we know are good predictors of game outcomes - namely Bookmaker prices and MARS Ratings - and the diversity comes from the fact that each learner uses only a single, different regressor, although the regressor in some models is a transformation of the regressor in others.

With this approach we seem to have found, on the basis of the evidence of the season so far, an ensemble predictor to reckon with and one that's exemplifying the benefits of diversity. Since about the middle of Round 7, RSMP_Weighted has had a mean absolute prediction error (MAPE) lower than that of any of the underlying base learners, as the following diagram shows:

The zero value marks the point where the respective RSMP has MAPE equal to the best-performed base learner. Values below zero denote periods where the charted RSMP is outperforming all the base learners at that same point in time. At about game 65 both RSMP_Simple and RSMP_Weighted crossed over the zero line and led all-comers. RSMP_Weighted has maintained this superiority continuously since then, though RSMP_Simple has more recently fallen slightly behind the best of the base learners.
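For anyone wanting to reproduce a chart of this kind, here's a rough sketch of the calculation as described: a season-to-date MAPE after each game, and the gap between an ensemble's MAPE and that of the best base learner at the same point. The function names and the tiny three-game data set are hypothetical.

```python
def running_mape(predicted, actual):
    """Season-to-date mean absolute prediction error after each game."""
    results = []
    total_abs_error = 0.0
    for games_played, (p, a) in enumerate(zip(predicted, actual), start=1):
        total_abs_error += abs(p - a)
        results.append(total_abs_error / games_played)
    return results


def gap_to_best_base(ensemble_pred, base_preds, actual):
    """Ensemble MAPE minus the lowest base-learner MAPE, game by game.

    Negative values mean the ensemble is ahead of every base learner
    at that point in the season.
    """
    ensemble_mape = running_mape(ensemble_pred, actual)
    base_mapes = [running_mape(p, actual) for p in base_preds.values()]
    return [
        e - min(b[i] for b in base_mapes)
        for i, e in enumerate(ensemble_mape)
    ]


# Hypothetical margins for three games
actual = [10, -5, 20]
base_preds = {"learner_A": [12, -1, 14], "learner_B": [4, -5, 26]}
ensemble_pred = [8, -3, 20]  # equal-weighted average of the two learners

print(gap_to_best_base(ensemble_pred, base_preds, actual))
```

Note that the identity of the "best" base learner is allowed to change from game to game, so the ensemble is always being compared with whichever learner happens to be leading at the time.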

We can see which of the base learners was the best at any point in time from this next chart, which graphs the difference between the MAPE of each base learner and the MAPE of RSMP_Weighted after every game.

For the last few weeks it's been the learners created using the Bookmaker's Home team price, the Home team Risk-Equalising Probability, and the log odds of the Home team Overround-Equalising Probability that have been the best-performing base learners.
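As an illustration of how one regressor can be a transformation of another, here's a sketch that turns a pair of head-to-head prices into a probability and then into log odds. It assumes the Overround-Equalising Probability is obtained by normalising the inverse prices so that the two teams' probabilities sum to 1; that definition isn't spelled out in this post, so treat it as my assumption. The prices are made up, and the Risk-Equalising variant isn't attempted here.

```python
import math


def overround_equalising_probability(home_price, away_price):
    """Implied home-team probability, assuming the overround is spread
    proportionally across both teams (an assumed definition)."""
    inv_home = 1.0 / home_price
    inv_away = 1.0 / away_price
    return inv_home / (inv_home + inv_away)


def log_odds(probability):
    """Natural log of the odds p / (1 - p), for 0 < p < 1."""
    return math.log(probability / (1.0 - probability))


# Hypothetical head-to-head prices
home_price, away_price = 1.50, 2.60

p_home = overround_equalising_probability(home_price, away_price)

# Three candidate regressors, each a transformation of the one before
regressors = {
    "home_price": home_price,
    "home_prob_oe": p_home,
    "home_log_odds_oe": log_odds(p_home),
}
print(regressors)
```

Because each transformation is non-linear, a learner fitted to the log odds won't make exactly the same predictions as one fitted to the raw price, which is where a little of the ensemble's diversity can come from.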

It's interesting to note that all the base learners seem to be converging on a MAPE about 1 point per game above RSMP_Weighted.

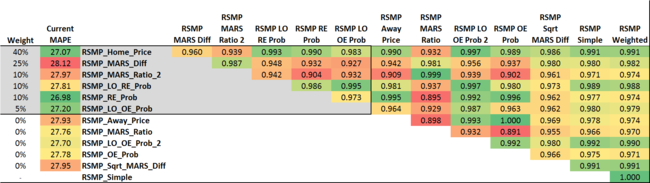

Some insight into the success of RSMP_Weighted can be gleaned by reviewing the cross-correlation matrix for the margin predictions of the base learners with each other and with RSMP_Simple and RSMP_Weighted.

Only six base learners are non-zero weighted in RSMP_Weighted, and the correlations for these six are highlighted by the grey box. Note that these correlations, while high in absolute terms, include many that are relatively low in the context of all cross-correlations for all base learners. So, each of the included base learners is bringing some independent information, however small, to the ensemble.
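A cross-correlation matrix like this one can be built from nothing more than each predictor's list of margin predictions. Here's a minimal sketch using plain Pearson correlations; the predictor names and margins are hypothetical, and I'm assuming Pearson is the appropriate correlation measure here.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length lists of predictions."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / math.sqrt(var_x * var_y)


def correlation_matrix(predictions):
    """Nested dict of pairwise correlations, keyed by predictor name."""
    names = list(predictions)
    return {
        a: {b: pearson(predictions[a], predictions[b]) for b in names}
        for a in names
    }


# Hypothetical margin predictions for five games
predictions = {
    "price_learner": [14.0, -6.0, 22.0, 3.0, -18.0],
    "ratings_learner": [10.0, -2.0, 30.0, -1.0, -12.0],
    "ensemble": [12.0, -4.0, 26.0, 1.0, -15.0],
}

matrix = correlation_matrix(predictions)
for name, row in matrix.items():
    print(name, [round(value, 3) for value in row.values()])
```

The lower the off-diagonal correlations among the learners that carry weight, the more each one has to offer that the others don't already provide.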

Also note that the Current MAPE column reveals the six base learners as including all of the best- and some of the worst-performed amongst the full set of learners. That said, none is so terrible that it destroys the accuracy of the ensemble entirely - RSMP_Weighted takes just enough notice of the RSMP_MARS_Diff base learner, for example, to benefit from its diversity of opinion without being excessively affected by its generally poorer performance.

(By the way, though I've not shown it explicitly in the table or in any of the charts, RSMP_Simple's MAPE currently stands at 26.98 points per game, and RSMP_Weighted's at 26.84 points per game.)